TensorWave Is Poised to Be AMD's Loudest Advocate

Last week, TensorWave received its first shipments of racks powered by AMD's MI355X GPUs. It could be a crucial step toward AMD's goal of rivaling NVIDIA's GPU dominance.

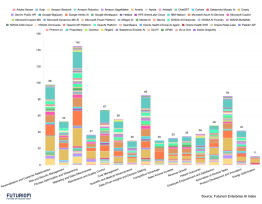

TensorWave is one of a few clouds, including Crusoe and Vultr, declaring they'll be among the earliest to offer services on the MI355X. TensorWave, though, is an all-AMD cloud, the only one of its kind. Naturally, AMD is an ally; it's invested in TensorWave and included the company in the MI350 launch in June, along with Meta and OpenAI.

The company already operates an 8,192-GPU cluster in Arizona, built on AMD's MI325X GPUs. Last October, TensorWave also signed a contract with datacenter operator Tecfusions for 1GW of capacity.

NVIDIA Alternative

NVIDIA can offer vertically integrated racks and entire datacenters, and it operates a public cloud of its own. That level of integration has allowed AI infrastructure to proliferate quickly, but it also primes the AI ecosystem to worry about lock-in. Hence, cloud providers like Vultr are eager to offer the AMD ecosystem as an alternative (AMD has invested in Vultr as well).

Building an all-AMD cloud is an extreme reaction but makes sense considering TensorWave's history.

TensorWave co-founder and chief growth officer Jeff Tatarchuk was a founder of VMAccel, an FPGA cloud service based in Cheyenne, Wyoming. Darrick Horton, now TensorWave's CEO, was VMAccel's CTO and later chief executive. VMAccel's infrastructure was based on Xilinx FPGAs, so when AMD acquired Xilinx in 2022, the team had an "in" with AMD.

NVIDIA already dominated the AI landscape by 2023, and its CUDA framework—primarily the trove of tools and libraries for interacting with the GPU—was seen as the moat protecting that market. So, the heart of TensorWave's mission is not just to float AMD as a chip alternative, but also to prove to developers that alternatives to CUDA are viable.

To drive that point home, TensorWave hosted its own guerrilla session, co-sponsored by AMD, outside NVIDIA's GTC 2025 conference in March. Called Beyond CUDA, the half-day conference convened startups, researchers, and chip experts to make the case that while CUDA democratized AI, it now risks becoming a monopoly.

The Money: Equity and Debt

TensorWave's outside funding started in October 2024 with a $43 million Simple Agreement for Future Equity (SAFE) round led by Nexus Venture Partners and including AMD. A SAFE is a seed funding mechanism that delays the investors' equity rights until a triggering event occurs.

That was followed in May 2025 by a $100 million Series A led by hedge fund Magnetar, which was also a major pre-IPO backer of CoreWeave.

Under Magnetar's guidance, both CoreWeave and TensorWave are funding growth through delayed draw term loans (DDTL), a debt financing method used in industrial real estate. A DDTL gives the borrower access to capital upon reaching specified milestones. GPU inventories and long-term contracts (which are the bulk of TensorWave's business, according to co-founder and president Piotr Tomasik) provide the collateral. TensorWave has completed an $800 million tranche of a DDTL to finance further AMD buildouts, Tomasik said in a briefing.

Note that TensorWave's DDTLs are not of the same magnitude as CoreWeave's, which involve three loans worth billions of dollars each.

AMD's Tech Arguments

TensorWave's existence is predicated on AMD being a good alternative to NVIDIA. As noted above, that also means breaking customers out of the CUDA ecosystem. Newer AI entrants are emerging as prime candidates, Tomasik said, because their work isn't already rooted in CUDA. Often, they use PyTorch wrappers, meaning their code can run on AMD's chips just as easily, he said.

On the hardware side, Tomasik points to AMD's memory roadmap as an attraction. The MI300X debuted with 192GB of HBM3 memory per GPU, or roughly 1.5TB across an eight-GPU platform, about 2.4 times the capacity of NVIDIA's 80GB H100, and subsequent chips have maintained the advantage, Tomasik said. That means AMD's chips can run larger models on fewer GPUs.
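To make the "fewer GPUs" arithmetic concrete, here is a rough back-of-the-envelope sketch in Python. It rests on several assumptions that are not from TensorWave or AMD: it counts only model weights (ignoring KV cache, activations, and framework overhead), it uses the publicly listed per-GPU capacities of 192GB for the MI300X and 80GB for the H100, and the function name and the example model sizes are purely illustrative.

```python
import math

# Assumed per-GPU memory capacities in GB (public spec-sheet figures,
# not numbers supplied by TensorWave).
MI300X_GB = 192
H100_GB = 80


def min_gpus_for_weights(params_billion: float,
                         bytes_per_param: float,
                         gpu_mem_gb: float) -> int:
    """Lower bound on GPUs needed just to hold the model weights.

    Hypothetical helper: ignores KV cache, activations, and runtime
    overhead, so real deployments will need more headroom.
    """
    # 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    weights_gb = params_billion * bytes_per_param
    return math.ceil(weights_gb / gpu_mem_gb)


if __name__ == "__main__":
    # Illustrative model sizes at 16-bit precision (2 bytes per parameter).
    for size_b in (70, 405):
        amd = min_gpus_for_weights(size_b, 2, MI300X_GB)
        nv = min_gpus_for_weights(size_b, 2, H100_GB)
        print(f"{size_b}B params: MI300X >= {amd} GPUs, H100 >= {nv} GPUs")
```

Under these assumptions, a 70-billion-parameter model at 16-bit precision (about 140GB of weights) fits on a single 192GB GPU but needs at least two 80GB GPUs. Real-world sizing depends heavily on context length, batch size, and quantization, so treat the output as a floor rather than a deployment plan.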

AMD's chips also divide up more easily for virtualization, he said. That makes it easier for TensorWave to pool unused GPUs for inferencing, squeezing more revenue out of its infrastructure.

TensorWave is taking on an underdog's role, certainly, but at a time when we're questioning how neoclouds will differentiate in the long run, it at least has an angle. And Tomasik sees signs that the market is ready for an NVIDIA alternative. "The lanes are opening up on the highway," he said.

Futuriom Take: TensorWave has found a marketable niche in the crowded neocloud field. It is not the only cloud offering large AMD clusters, but it is poised to serve as both proof point and laboratory for the customers considering GPU alternatives.