VDURA Shows Off AI Data Functionality in AMD Blueprint

VDURA, a storage and data management provider with HPC credentials, has optimized its product to fit AI pipelines. And to prove its value, the company has released an updated, tested, and validated reference architecture pairing its V5000 platform with AMD Instinct MI300 GPUs. The design has been adopted by a U.S. federal systems integrator for use in an AI supercluster, VDURA said.

The news is significant on two levels. First, it shows established storage and data management vendors evolving to meet the demands of AI. Second, the blueprint showcases the capabilities of non-NVIDIA approaches, which are growing in popularity.

VDURA (a portmanteau of “velocity” and “durability”) emerged in 2023 as the reinvention of Panasas, the vendor whose PanFS parallel file system combined storage media optimization with data management and protection for HPC environments. Under new CEO Ken Claffey (ex-Seagate, Xyratex, and Adaptec), the company shifted to a microservices architecture and completely reworked its platform for AI.

Optimized Power and Cost

The new blueprint lays out how VDURA has adapted its technology to AI workloads, specifying how enterprises can make sure storage in a GPU infrastructure supports efficient use of resources, including power and compute. A central concept is intelligent real-time data tiering, a VDURA specialty.

According to VDURA, storage needs differ at each stage of the AI pipeline, from data collection through training to inference. “Each one of these stages requires different storage infrastructure requirements,” said Matt Swalley, senior director of marketing, in a recent conference presentation. “Some of them need very fast speeds, some of them you have to hold data for long-term retention. They have different demands … on reads and writes.”

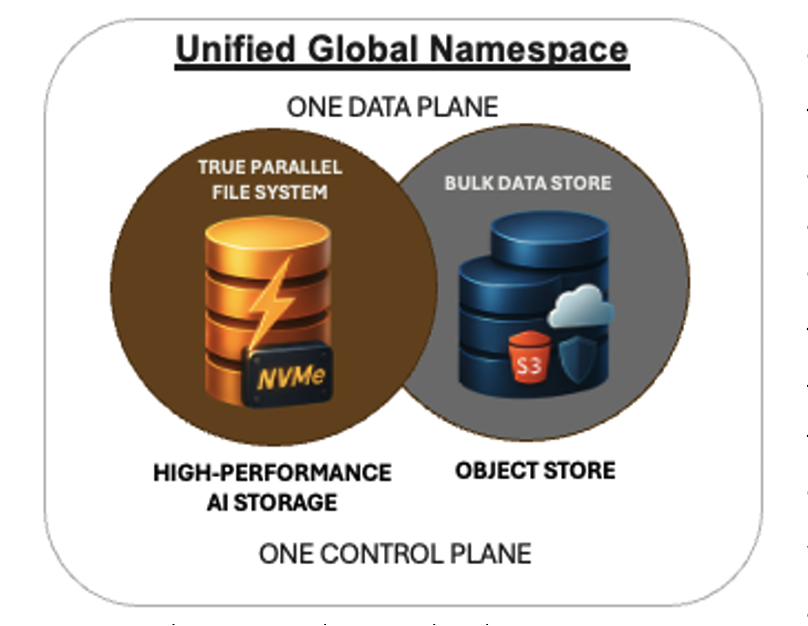

VDURA’s architecture allows data to be shifted in real time from all-flash to hybrid flash and hard disk drive storage according to the specific stage in the AI pipeline. All data is managed via a single namespace and protected by erasure coding.
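The article doesn't say how VDURA's tiering engine decides where data lives. As a rough illustration only, the sketch below maps pipeline stages to the two media options named above and moves a dataset between them while its path in the single namespace stays fixed. The stage names, the `Tier` enum, `STAGE_POLICY`, `Dataset`, and `retier` are all hypothetical, not VDURA APIs or VDURA's actual policy.

```python
from dataclasses import dataclass, field
from enum import Enum


class Tier(Enum):
    # Hypothetical labels for the two media options described in the article.
    ALL_FLASH = "all-flash"
    HYBRID = "hybrid flash + HDD"


# Hypothetical stage-to-tier policy; not VDURA's actual rules.
STAGE_POLICY = {
    "collection": Tier.HYBRID,    # bulk ingest: capacity matters more than latency
    "training": Tier.ALL_FLASH,   # GPUs need fast, sustained reads
    "inference": Tier.ALL_FLASH,  # latency-sensitive reads
    "retention": Tier.HYBRID,     # long-term retention on denser, cheaper media
}


def tier_for(stage: str) -> Tier:
    """Look up the preferred tier for a stage, defaulting to the capacity tier."""
    return STAGE_POLICY.get(stage, Tier.HYBRID)


@dataclass
class Dataset:
    path: str  # path in the single namespace; tiering never changes it
    stage: str
    tier: Tier = field(init=False)

    def __post_init__(self) -> None:
        # Place a new dataset on the tier its starting stage calls for.
        self.tier = tier_for(self.stage)


def retier(ds: Dataset, new_stage: str) -> Dataset:
    """Record the dataset's new stage and move it to the matching tier."""
    ds.stage = new_stage
    ds.tier = tier_for(new_stage)
    return ds


corpus = Dataset("/projects/llm/corpus", "collection")
retier(corpus, "training")
print(corpus.path, corpus.tier.value)  # /projects/llm/corpus all-flash
```

Keeping the path constant is what a single namespace buys: applications keep addressing the same file while the storage system changes which media physically holds it.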

The AMD Proving Ground

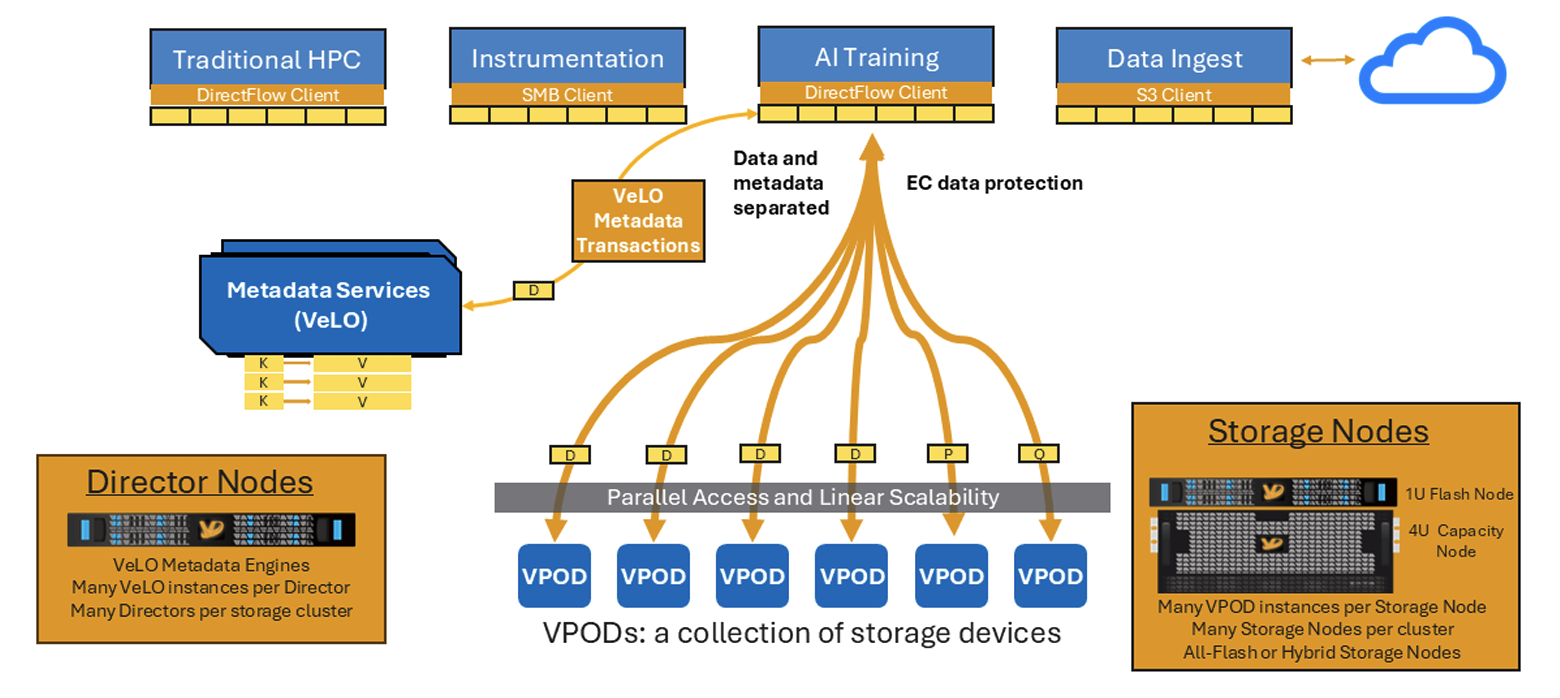

VDURA’s new reference design also highlights its architecture, which combines its parallel file system with object storage. In the VDURA Data Platform, control and data planes are separated. Director Nodes govern the control plane, orchestrating and managing metadata operations and coordinating Storage Nodes and a DirectFlow Client, a Linux-compatible driver for the vendor’s parallel file system.

VDURA Data Platform concepts. Source: VDURA
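To illustrate what separating the control and data planes means, here is a toy model in which a director only hands out file layouts (metadata) and the client moves bytes directly to and from storage nodes. The class names echo the article's terminology, but `DirectorNode`, `StorageNode`, `Client`, the round-robin placement, and the 4-byte chunk size are hypothetical simplifications, not how VDURA's Director Nodes or DirectFlow Client are actually implemented.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class StorageNode:
    """Data plane: holds object payloads and serves them straight to clients."""
    name: str
    objects: dict[str, bytes] = field(default_factory=dict)


@dataclass
class DirectorNode:
    """Control plane: tracks which objects make up each file and where. Holds no file data."""
    node_names: list[str]
    layouts: dict[str, list[tuple[str, str]]] = field(default_factory=dict)

    def allocate(self, path: str, n_chunks: int) -> list[tuple[str, str]]:
        # Round-robin placement across storage nodes (a simplifying assumption).
        layout = [
            (self.node_names[i % len(self.node_names)], f"{path}#{i}")
            for i in range(n_chunks)
        ]
        self.layouts[path] = layout
        return layout

    def lookup(self, path: str) -> list[tuple[str, str]]:
        return self.layouts[path]


class Client:
    """Hypothetical client: one metadata call to the director, then direct I/O to storage nodes."""

    def __init__(self, director: DirectorNode, nodes: dict[str, StorageNode], chunk_size: int = 4) -> None:
        self.director = director
        self.nodes = nodes
        self.chunk_size = chunk_size

    def write(self, path: str, data: bytes) -> None:
        chunks = [data[i:i + self.chunk_size] for i in range(0, len(data), self.chunk_size)]
        layout = self.director.allocate(path, len(chunks))
        for (node_name, obj_id), chunk in zip(layout, chunks):
            self.nodes[node_name].objects[obj_id] = chunk  # data bypasses the director

    def read(self, path: str) -> bytes:
        layout = self.director.lookup(path)
        return b"".join(self.nodes[name].objects[obj_id] for name, obj_id in layout)


nodes = {name: StorageNode(name) for name in ("sn1", "sn2", "sn3")}
client = Client(DirectorNode(sorted(nodes)), nodes)
client.write("/train/shard0", b"hello parallel fs")
print(client.read("/train/shard0"))  # b'hello parallel fs'
```

Because the director is consulted only for layouts, adding storage nodes adds bandwidth without putting the metadata service on the data path, which is the usual rationale for this split in parallel file systems.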

Storage Nodes come in two configurations: all-NVMe flash, or NVMe flash with HDD capacity expansion to help save energy. Each Storage Node includes Virtual Protected Object Devices (VPODs), which make data movement more granular.

VDURA Data Platform architecture. Source: VDURA
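The article says all data is protected by erasure coding but doesn't specify the code VDURA uses. The simplest erasure code, a single XOR parity chunk, is enough to show the idea: split data into k chunks, store each chunk (plus the parity) on a different object device, and rebuild any one lost chunk from the rest. This is a generic illustration, not VDURA's scheme; production systems typically use codes such as Reed-Solomon that survive several simultaneous failures, and `encode`, `decode`, and `xor_bytes` are hypothetical names.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal chunks (zero-padded) and append one XOR parity chunk."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    chunks = [padded[i * size:(i + 1) * size] for i in range(k)]
    return chunks + [reduce(xor_bytes, chunks)]


def decode(stripe: list[bytes | None], k: int, length: int) -> bytes:
    """Rebuild at most one missing chunk, then reassemble and strip the padding."""
    missing = [i for i, c in enumerate(stripe) if c is None]
    if len(missing) > 1:
        raise ValueError("single parity can rebuild only one lost chunk")
    chunks = list(stripe)
    if missing:
        # The XOR of all k+1 chunks is zero, so the lost chunk equals the XOR of the survivors.
        chunks[missing[0]] = reduce(xor_bytes, [c for c in stripe if c is not None])
    return b"".join(chunks[:k])[:length]


data = b"GPU checkpoint shard"
stripe: list[bytes | None] = list(encode(data, k=4))
stripe[2] = None  # simulate losing one object device
print(decode(stripe, k=4, length=len(data)))  # b'GPU checkpoint shard'
```

With k = 4 the capacity overhead is one parity chunk per four data chunks, or 25%, versus 100% for a full mirror.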

The AMD blueprint includes VDURA V5000 Storage Nodes; AMD Instinct GPUs (including the MI300X and MI325X) linked by AMD’s Infinity Fabric interconnect; the VDURA DirectFlow Client running on Linux; and 400-Gb/s Ethernet switches connected to NVIDIA SmartNICs.

As noted, this reference architecture highlights VDURA’s interest in supporting AMD, whose GPUs it says are becoming more competitive with NVIDIA’s (VDURA supports NVIDIA as well). The blueprint has been in the works for a while: its first version dates to May 2025, and it has gone through a few iterations since, including reviews by AMD. This is the first time VDURA has officially announced it.

VDURA’s Market Presence

The release of the AMD reference architecture, and its adoption by a government SI, also point to VDURA’s growing traction in a market dominated by DDN, VAST Data, and WEKA, all of which pair storage technology with sophisticated data management. VDURA is betting that its cost-effectiveness and storage media flexibility will help it win against these competitors.

This approach appears to be working. VDURA claims to have won bids for HPC and AI data management at the Center for Scientific Computing at Goethe University in Frankfurt, Germany; the High Performance Computing Center at Santa Clara University; and the Hormel Institute for biomedical research at the University of Minnesota, among others.

The vendor also has a partnership with New Mexico State University to develop post-quantum cryptography to protect AI and HPC data pipelines.

Futuriom Take: VDURA’s reference architecture with AMD highlights how HPC storage and data management platforms are adapting to fit AI pipelines. Its support of AMD is evidence of the growing demand for alternatives to NVIDIA.