Dell Expands Its Role as an AI Data Platform

Dell this week announced updates to the Dell AI Data Platform, the subset of the Dell AI Factory that deals with storage and data handling.

Dell is best known for the storage side, and the updates there included integration with NVIDIA's GB200 and GB300 NVL72 rack-scale offerings.

What's arguably more intriguing, though, is the way Dell is fleshing out the data side of the AI Data Platform, with functions such as search and analytics. Combined with storage, these make the AI Data Platform a kind of data substrate that creates and maintains the information pool AI models draw from.

This taps into a wider trend of vendors doing more and more integration and serving up large portions of the AI datacenter as single products, a trend we noted at the recent Open Compute Project Summit.

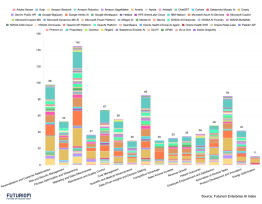

Dell's announcements also show that the AI Data Platform competes with storage vendors (or companies perceived as storage vendors) that are extending into data management. Specifically, Dell wants to call out its differences from VAST Data and Pure Storage.

Search, Analytics, and Agents

Dell’s new announcements on the data side included:

- The Data Search Engine, developed with Elastic, which lets customers query data sources using natural language. It is built on the integration of the Elasticsearch search and analytics engine into the Dell AI Data Platform, and it can search data in any format, including unstructured data such as documents and images.

- The Data Analytics Engine, for querying data across all sources regardless of location. The technology here comes from Starburst, an 8-year-old startup.

- The Data Analytics Engine Agentic Layer, which includes an MCP server to enable multi-agent workflows.

- Integration with NVIDIA's cuVS, an open-source library for GPU-accelerated vector search. cuVS augments existing vector databases and can speed up retrieval-augmented generation (RAG), helping make that process more turnkey.

Integration and Openness

The big picture behind the Dell AI Data Platform is to market Dell's integration abilities to customers who need them, while leaving the platform open for customers who want to insert certain pieces themselves. Elasticsearch isn't a strict requirement for the platform, for example; customers can bring their own vector databases.

At the same time, Dell must fend off unconventional rivals from outside the traditional server and storage worlds, most notably VAST Data.

Pure Storage is in that bucket as well, but VAST is particularly ambitious. Its self-described role as the "operating system" for AI deployments puts it up against multiple types of incumbents, not just storage players. It certainly brushes up against Dell's plans to serve as a data platform.

In the spirit of openness, Dell acts as an OEM for VAST, and Dell was even an early VAST investor. But Dell contrasts its approach (open components, many partners) with the more locked-in approaches of VAST and Pure.

All three companies and their peers recognize that AI seems primed for a wave of turnkey solutions. Some customers are inexperienced at datacenter building. Governments are seeking sovereign clouds. Some enterprises are in this boat, too; they’re interested in private-cloud AI for reasons of privacy and control, but they no longer have datacenter staff, having ceded “datacenter” work to the public clouds.

Even the experienced AI datacenter builders—the neoclouds—seem interested in integrated products as a way to accelerate deployments. Dell wants to be on top of all these trends, and that means gathering expertise in the data side of datacenters.