AI Changed the Datacenter Rules, as Seen at OCP Summit

The Open Compute Project (OCP) has focused on AI for some time now—who hasn’t?—but this week’s OCP Global Summit marks a new phase for the organization as it accommodates the new type of datacenter that AI has wrought.

The Open Systems for AI initiative, announced this week, is not just AI branding. NVIDIA’s AI factory revolution, led by the neoclouds, has written new rules for AI infrastructure. The norms include an obsession with fast deployment and a need to support power-hungry racks of GPUs.

Those changes could be seen on the Summit’s expo floor this week in San Jose, California, where modular datacenters and 800-volt power distribution were among the spotlighted topics.

The New AI Reality

Once upon a time, Meta (then Facebook) initiated OCP as a way to apply open-source discussion and methods to hyperscaler hardware. It was datacenter-focused at first but has since spread into related areas including semiconductors and distributed edge computing.

AI, though, has set new parameters related to power, computing, telemetry (think building environments), and even mechanical factors such as aisle widths and the weight-bearing capacity of flooring. Additionally, the race to deploy GPU farms means there’s a market for being able to stand up datacenters and campuses in less than a year—breakneck speed by traditional datacenter standards.

“Maybe now, we don’t have enough flexibility” for those requirements, said Cliff Grossner, OCP’s chief innovation officer.

Because they've moved so fast, neoclouds haven’t put effort into things like commonality and standardization. This is where OCP says it can help. The group doesn’t do formal standards, but it serves as a consensus-building space, a forum for pre-standards discussions, Grossner said.

Open Systems for AI is OCP’s new umbrella initiative for IT and physical infrastructure and possibly even the interface to the power grid. It’s launching with two projects: one on coolant distribution units (CDUs) for liquid cooling, and one on facilities-level power distribution.

Some recent member contributions fall into the Open Systems for AI purview. They include the Deschutes CDU (a fifth-generation design contributed by Google) and the Clemente compute shelf for two NVIDIA GB300s, contributed by Meta.

Another new AI-related project, Ethernet for Scale-Up Networking (ESUN), will examine networking issues. Grossner said OCP is keeping in touch with the Ultra Accelerator Link (UALink) and Ultra Ethernet Consortium (UEC) organizations to avoid duplicating work.

Datacenter-As-Product

Neoclouds move quickly, even when it comes to building datacenters from the ground up, thanks to the use of modular designs. Crusoe, for example, has deployed modular datacenters since its days in cryptocurrency. The modular idea has been around for a long time, and it's enjoying renewed attention thanks to AI.

Modularity, in this case, means a vendor can snap together pieces—compute, networking, cooling, power systems, and so on—to produce racks, pods, or complete, prefab datacenters.

This week, Supermicro launched these capabilities under the product name Data Center Building Block Solutions. Flex did the same, referring to its portfolio more generically as an AI infrastructure platform. Flex’s OCP booth also included a demo of a 1MW rack, described in the 800V section below.

The end goal is to treat the entire datacenter as a “product” orderable from one vendor. Offering the full datacenter will take time—power systems are a difficult specialty to tackle, Supermicro notes—but providing fully integrated racks and rows still speeds deployment considerably.

It's also useful for customers lacking in datacenter expertise, such as governments building sovereign clouds, Supermicro officials said during an offsite tour that coincided with OCP.

The vertical integration does create potential for lock-in, but it also provides one point of contact for the many vendor partners that get integrated into that datacenter. For customers striving for speed, it’s a worthy tradeoff.

Vendors including Dell, HPE, and NVIDIA itself have begun offering rack-scale and datacenter-scale products as well. If all goes as planned, cloud builders (both neoclouds and conventional hyperscalers like AWS) will have an avenue toward faster construction. They still need to secure the necessary power, of course, but that's a separate issue.

The March to 800V

Power per rack has blown past datacenter norms. NVIDIA’s Kyber rack, designed to run 576 Rubin Ultra GPUs and due to arrive in 2027, will require 600kW of power initially. Later designs will scale to 1MW per rack.

That’s created a push for 800V DC in the datacenter, going beyond the 400V work that AI was already driving. For perspective, the datacenter norm is 48V, and NVIDIA cites AI factories running on 54V.
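To see why rack power at this scale pushes toward higher distribution voltage, a rough calculation helps. The sketch below applies the ideal DC relation I = P / V to the rack power figures and voltages quoted above. It ignores conversion losses and the details of real power-delivery topologies, and the function and variable names are illustrative only.

```python
def bus_current_amps(power_watts: float, bus_volts: float) -> float:
    """Ideal DC current for a load: I = P / V (conversion losses ignored)."""
    if bus_volts <= 0:
        raise ValueError("bus_volts must be positive")
    return power_watts / bus_volts


# Rack power and bus voltage figures quoted in the article.
RACK_POWER_W = {"600kW": 600_000, "1MW": 1_000_000}
BUS_VOLTS = (48, 54, 400, 800)


def current_table() -> dict:
    """Map each rack power label to {bus voltage: ideal current in amps}."""
    return {
        label: {volts: bus_current_amps(watts, volts) for volts in BUS_VOLTS}
        for label, watts in RACK_POWER_W.items()
    }


if __name__ == "__main__":
    for label, row in current_table().items():
        for volts, amps in row.items():
            print(f"{label} rack at {volts}V DC: {amps:,.0f} A")
```

Under these simplifications, a 1MW rack would draw roughly 18,500 A at 54V but 1,250 A at 800V. Because resistive loss in a conductor scales with the square of the current, lower current also means less heat and less copper for the same delivered power.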

Among neoclouds, NVIDIA says CoreWeave, Lambda, Nebius, Oracle Cloud Infrastructure and Together AI are designing for 800V datacenters. The company adds that Foxconn is designing a new 40MW datacenter with 800V distribution.

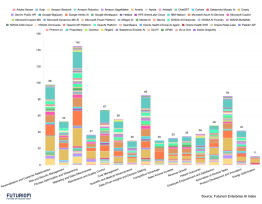

NVIDIA says more than 20 of its partners at OCP showed gear supporting 800V. The list includes well-known datacenter infrastructure names such as Delta, Eaton, Schneider Electric, and Siemens. More than half the list consists of power-semiconductor vendors such as Infineon, onsemi, STMicroelectronics, and Texas Instruments.

At the Summit, HPE announced product support for Kyber, and Vertiv released an 800V reference architecture including power distribution and cooling.

Additionally, Flex showed a 1MW rack at the Summit. Tying in with the modular trend noted above, it’s a prefab, fully integrated rack meant to speed deployment. For liquid cooling, the rack uses modular CDUs that sit at the end of the row. Using two to six of them, Flex says it can provide up to 1.8MW of cooling capacity. The integrated nature of the rack means Flex can keep tabs on exactly how much power is being drawn and how much cooling is required.
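The CDU figures invite a quick sizing exercise. The sketch below is not Flex's method. It rests on two assumptions that the article does not state: that the 1.8MW ceiling corresponds to all six units, with capacity scaling linearly per unit, and that essentially all rack power ends up as heat the liquid loop must remove. All names are hypothetical.

```python
import math

MAX_COOLING_KW = 1800  # "up to 1.8MW" of cooling, per the article
MIN_CDUS = 2           # lower end of "two to six"
MAX_CDUS = 6           # upper end of "two to six"

# ASSUMPTION (not from the article): the 1.8MW ceiling is reached with six
# units and each unit contributes equally, which implies 300kW per CDU.
KW_PER_CDU = MAX_COOLING_KW / MAX_CDUS


def cdus_needed(heat_load_kw: float) -> int:
    """Smallest CDU count in the two-to-six range that covers the heat load.

    ASSUMPTION: heat_load_kw is the full load the liquid loop must remove.
    """
    if heat_load_kw < 0:
        raise ValueError("heat_load_kw must be non-negative")
    needed = max(MIN_CDUS, math.ceil(heat_load_kw / KW_PER_CDU))
    if needed > MAX_CDUS:
        raise ValueError("heat load exceeds the assumed six-CDU capacity")
    return needed


if __name__ == "__main__":
    for load_kw in (600, 1000, 1800):
        print(f"{load_kw}kW heat load: {cdus_needed(load_kw)} CDUs (assumed linear scaling)")
```

Under those assumptions, a 1MW heat load would call for four units. Real sizing would also depend on redundancy requirements, coolant temperatures, and flow rates, none of which are modeled here.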

From Chips to Racks

AI is pushing changes even down at the chip level. Startups such as Cerebras and Groq designed their own silicon for AI, reasoning that the radically different nature of the computing required a rethink of processors and accelerators. It's hard to build an AI business on chips alone, though, so both companies operate their own clouds built on their accelerators.

That brings us to d-Matrix. Founded in 2019, the startup developed its Digital In-Memory Compute (DIMC) chip architecture for AI inferencing. Tapping into that theme of chip vendors going "up the stack" to rack-scale and beyond, d-Matrix was at OCP showing off SquadRack, its new rack-scale product built with partners Supermicro, Broadcom, and Arista.

Separately, AMD showed its own rack-level product, Helios, in a “static display” at the Summit. First announced in June, Helios is built around the upcoming Instinct MI400 series GPUs and is targeted for a 2026 launch. Helios is a necessary step for AMD to be a credible rival to NVIDIA's full-rack products such as the GB200 NVL72.