NVIDIA Takes AI Supercomputer to the Cloud

NVIDIA (Nasdaq: NVDA) today unveiled a series of products targeted at artificial intelligence (AI) applications, including DGX Cloud, a cloud-hosted supercomputing service.

“We are at the iPhone moment of AI. Startups are racing to build disruptive products and business models, and incumbents are looking to respond,” said Jensen Huang, founder and CEO of NVIDIA, in a keynote address for the company’s GTC virtual conference, which runs this week. “DGX Cloud gives customers instant access to NVIDIA AI supercomputing in global-scale clouds.”

Sporting his trademark black leather jacket, Huang stressed the importance of generative AI across multiple industries, pointing out that DGX Cloud provides resources that are otherwise tough for enterprise customers to access.

Oracle Cloud Infrastructure Is Up First

DGX Cloud’s first host of record is Oracle (NYSE: ORCL), which is running a supercomputing setup on Oracle Cloud Infrastructure (OCI). DGX Cloud will be launched on Microsoft (Nasdaq: MSFT) Azure next quarter, and Google Cloud Platform (GCP) is set to follow at an unspecified date, NVIDIA said. AWS was not mentioned, though NVIDIA has an alliance with parent Amazon (Nasdaq: AMZN) to track and orchestrate warehouse robots via NVIDIA’s Omniverse platform for AI simulations.

The announcement showcases the importance of Oracle as a key cloud hyperscaler, in fourth place behind AWS, Microsoft Azure, and GCP -- though maybe not for long.

In its latest earnings report, Oracle posted $4.1 billion in quarterly revenue for its cloud services, up 45% year-over-year (y/y). Cloud sales now account for one-third of Oracle’s overall revenue. By comparison, Alphabet (Nasdaq: GOOGL) showed $7.3 billion in revenue from Google Cloud in its most recently reported quarter, up 32% y/y. AWS sales were $21.4 billion, up 20%, and Microsoft Intelligent Cloud, which incorporates Azure, posted $21.5 billion, up 18%.
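To put those growth rates in perspective, the year-ago revenue each figure implies can be backed out by dividing the reported revenue by one plus the growth rate. The short Python sketch below does that arithmetic using only the numbers quoted above. The names `REPORTED` and `implied_year_ago_revenue` are illustrative, and the results are rough estimates because the quoted figures are rounded.

```python
# Figures as quoted in the article: (quarterly revenue in $ billions, y/y growth).
REPORTED = {
    "Oracle cloud services": (4.1, 0.45),
    "Google Cloud": (7.3, 0.32),
    "AWS": (21.4, 0.20),
    "Microsoft Intelligent Cloud": (21.5, 0.18),
}


def implied_year_ago_revenue(current: float, growth: float) -> float:
    """Back out the year-ago figure from current revenue and y/y growth."""
    return current / (1.0 + growth)


# Estimates only: the inputs are rounded, so the outputs are approximate.
year_ago = {
    name: implied_year_ago_revenue(revenue, growth)
    for name, (revenue, growth) in REPORTED.items()
}

for name, (revenue, growth) in REPORTED.items():
    added = revenue - year_ago[name]
    print(
        f"{name}: ${revenue:.1f}B now, roughly ${year_ago[name]:.2f}B a year "
        f"earlier (about ${added:.2f}B added, {growth:.0%} growth)"
    )
```

On these numbers, Oracle is growing fastest in percentage terms but from the smallest base, so the absolute revenue it added over the year is still well below what AWS or Microsoft added.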

Oracle’s OCI setup is designed to run NVIDIA’s AI applications on an Oracle Supercluster that supports up to 4,096 OCI Compute Bare Metal instances, which can process applications in parallel. The Supercluster is supported by 32,768 A100 GPUs or, on a limited-availability basis, NVIDIA’s H100 GPU, which is designed for large language model inferencing.
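The two Supercluster figures can be cross-checked with simple division. The Python sketch below assumes the 32,768 A100 GPUs are spread evenly across the maximum of 4,096 bare metal instances, which the announcement does not state outright. The constant names are illustrative.

```python
# Figures as quoted in the article.
MAX_BARE_METAL_INSTANCES = 4_096
MAX_A100_GPUS = 32_768

# Assumption (not stated in the source): GPUs are divided evenly across instances.
gpus_per_instance, leftover = divmod(MAX_A100_GPUS, MAX_BARE_METAL_INSTANCES)

print(f"GPUs per instance: {gpus_per_instance}")
print(f"GPUs left over: {leftover}")
```

Under that assumption the numbers divide cleanly, at eight GPUs per instance with none left over.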

Networking incorporates NVIDIA's BlueField-3 DPUs along with RDMA over Converged Ethernet (RoCE), providing ultra-low latency. Options for HPC storage are also part of the deal.

Starting at Only $40K per Month

The DGX Cloud instances are costly: They start at $36,999 per instance per month. So far, though, there are some prominent takers. Biotechnology firm Amgen is using the service for drug discovery. CCC Technology Solutions, which serves the insurance industry, is using it to streamline auto claims resolution. And ServiceNow is offering its customers AI research resources powered by the service.

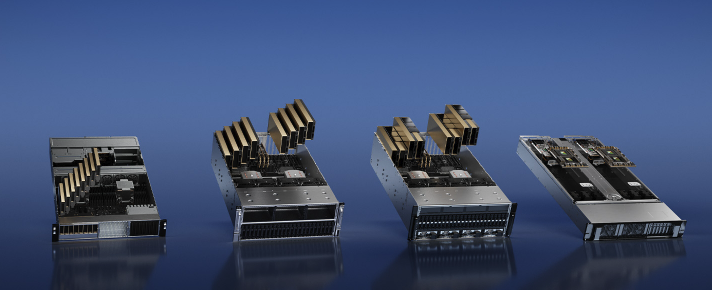

Among NVIDIA’s other announcements today was AI Foundations, a series of components meant to serve as a platform for generative AI applications. Included are the L4 Tensor Core GPU, a chip for AI video processing; the L40 for image generation in concert with NVIDIA Omniverse; the H100 for large language modeling; and the Grace Hopper platform for graph recommendation models, vector databases, and graph neural networks.

NVIDIA AI Foundations platforms. Source: NVIDIA

Today’s announcements show NVIDIA’s clear strategy to provide the technology for building large-scale AI across a range of enterprises. It’s also interesting to see the cloud element gain importance. The combination could signal a powerful trend for industries large enough to support the costly solutions.