NVIDIA CEO Defines "Must Haves" for Data Centers.

Enterprise data centers are evolving to accommodate the growth of artificial intelligence (AI), according to Jensen Huang, CEO of NVIDIA (NVDA).

“This is an amazing time for the computer industry and the world,” Huang said during a virtual keynote for NVIDIA’s GPU Technology Conference (GTC), which is taking place online this week. “As mobile cloud matures, the age of AI is beginning.”

The importance of AI, Huang maintained, is that it automates processes that benefit human development. The current challenge is to automate the creation of the software that makes this automation possible across many applications. This calls for a new model of high-performance computing. In his keynote and other recent interviews, Huang has roughly outlined what's needed. Let’s take a closer look.

Hyperscale Datacenter Requirements

As taken from Huang’s remarks, the specific requirements of emerging intelligent data centers include the following:

Hyperscale datacenter chips. AI places heavy demands on CPUs, and for optimal performance much of that load must be offloaded to dedicated silicon. Not surprisingly, Huang touts NVIDIA’s own recently announced BlueField-2 Data Processing Unit (DPU) and the accompanying Data-Center-Infrastructure-on-a-Chip Architecture (DOCA) software as examples.

NVIDIA says that BlueField-2, based on technologies acquired with Mellanox earlier this year, can handle data center services that would otherwise consume up to 125 CPU cores. Huang described it as a “datacenter infrastructure processing chip” comprising “accelerators for networking, storage, and security and programmable Arm CPUs to offload the hypervisor.”

Speaking of Arm, NVIDIA’s focus on powerful components led to its recent commitment to purchase Arm Holdings from SoftBank for $40 billion, a venture Huang apparently hopes will lead to a massive sales channel for NVIDIA.

NVIDIA isn’t alone in applying new components to accelerate AI. Broadcom (AVGO), Innovium, and Intel (INTC) also have released chips geared to hyperscale AI data center applications.

Cloud connectivity. Smart data centers require cloud connections. In this vein, NVIDIA has announced a deal with Oracle (ORCL) in which the cloud provider will offer enterprises computing instances based on the following: a bare-metal implementation of NVIDIA’s A100, an accelerator chip for AI and high-performance computing (HPC) applications; NVIDIA Mellanox network interface cards (NICs) for clustering; and software stacks geared to specific AI-driven applications.

There is nothing original in this concept. Indeed, it is the basis for nearly every partnership between public cloud players and other IT software and hardware suppliers. Clearly, Oracle and NVIDIA intend to profit from the demand behind it.

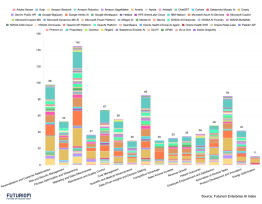

Multi-cloud networking. This is an area that, as noted in a recent Futuriom report, gets more complicated by the month, as enterprises move to support a mix of private, public, and hybrid cloud environments in a fully distributed and virtualized fashion.

Domain-specific software stacks. AI applications vary in the types of processing, virtualized components, and microservices required to develop and run them. Huang said NVIDIA has partnered with key providers to offer software stacks that work with NVIDIA’s components for a range of applications, such as computer graphics simulations for crash testing, fluid dynamics, particle simulations, and genomics processing.

Cloud players such as Salesforce (CRM), Oracle, and SAS AB already offer application-specific service instances. But expect more permutations at the component level, along the lines NVIDIA describes, in the coming years.

A Gradual Transition

Bringing all these elements together isn’t as smooth, easy, or cheap a process as vendors such as NVIDIA describe. But enterprises seem intent on moving toward the vision, even if they do so slowly. As Huang noted, today’s computing isn’t just about running software; it’s about developing it along the way.

“There are many things we like to automate. We are limited by our ability to write the software,” he said. But thanks to advances in technology, “Now software can write software.” The issue is bringing the underlying technologies together to make it happen.