IBM Steps Up in AI Inferencing with New Hardware

In a move highlighting areas of growing concern for enterprise customers, IBM last week announced a series of new Power servers and accompanying chips optimized to handle complex hybrid cloud workloads as well as AI inferencing.

The news also raises the question of who will be interested in buying IBM's comprehensive platform. Until now, the Power series has largely been confined to all-IBM environments running heavy transactional workloads. But the new Power11 seems to reach beyond that.

The Power11 series includes hardware units based on IBM’s own Power11 microprocessors, with models ranging from high-end to midrange to entry-level. A virtual server version is also available to run in the IBM Cloud. All models, set for general availability (GA) on July 25, feature zero planned downtime for system maintenance via autonomous upgrades, ransomware threat detection in under one minute, and on-chip acceleration for AI inferencing.

Installing an IBM Power S1124, one of the new Power11 systems. Source: IBM

There are many other integrations and features for the Power11, including AI-enabled code assistance, integrated quantum-safe cryptography, automated energy efficiency, and links with Red Hat OpenShift AI. In Q4 of this year, the Power11 units, along with IBM mainframes and other enterprise systems, will also support the IBM Spyre Accelerator, a system-on-a-chip designed for AI inference workloads.

IBM was careful to state that it’s not attempting to compete with NVIDIA in large-scale AI (though it’s clearly competing with NVIDIA solutions in inferencing; more on that momentarily). “[The Power11 is] not going to have all the horsepower for training or anything, but it's going to have really good inferencing capabilities that are simple to integrate,” Tom McPherson, General Manager, IBM Power Systems, told Reuters.

IBM Reaches Beyond a Niche

As noted, IBM's Power systems are known for their integration with SAP's ERP software and other large-enterprise solutions that handle business transactions. The Power11 continues to qualify as what IBM calls a "hyperscaler platform for RISE with SAP," but IBM is also aiming to expand its appeal to customers needing inference capabilities for AI applications.

Indeed, the Power11 announcement highlights a market serving large enterprises in industries such as financial services, retail, government, and healthcare, which require hefty servers with hybrid cloud capabilities. Increasingly, these customers also want their powerful systems to handle AI inferencing workloads, which apply trained models to enterprise data to power AI applications.

These AI inferencing systems have a range of requirements, and IBM’s announcement stresses two of the top ones: security and high availability. Downtime is increasingly unacceptable in any AI-related workload because the platform infrastructure is so expensive; every hour lost erodes the return on that investment. AI inferencing systems also must feature top-grade security and, preferably, some form of post-quantum protection.

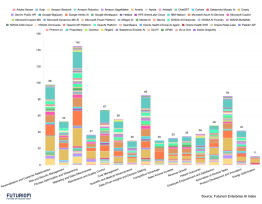

Stacking Up Against the Competition

That IBM has addressed these growing customer needs signals its intent to move beyond its Power niche and go full bore into competition with other makers of hardware-based AI inference servers that also double as heavy-duty IT machines.

Hewlett Packard Enterprise (HPE), not to be confused with NVIDIA OEM HP Inc., offers the HPE ProLiant Gen12 series, whose models are based on Intel Xeon, NVIDIA GH200, or AMD EPYC processors and support NVIDIA and AMD GPUs.

Dell Technologies offers its Dell PowerEdge AI Servers, which, like some of HPE’s, are based on Intel Xeon and AMD EPYC processors. Dell’s AI servers come with a range of management tools that automate upgrades and optimize energy consumption.

Though IBM isn't aiming to compete directly with NVIDIA, the Power11 will probably bump up against that vendor's DGX Spark “personal supercomputer,” expected to be released this month via products from OEMs Acer, ASUS, Dell Technologies, GIGABYTE, HP Inc., Lenovo, and MSI. The DGX Spark comes with access to NVIDIA NIM microservices and NVIDIA Blueprints, both of which aid inferencing and AI application development. Notably, though, the DGX Spark isn't designed to double as a general-purpose IT server.

Inference Server Software

IBM's Power11 inference support will also compete against a growing roster of software-based inference servers, which can be deployed on a variety of hardware platforms. These include NVIDIA’s Triton Inference Server, which features a model repository and scheduling capabilities such as dynamic batching that are designed to streamline AI inferencing. The server software works with numerous chips and GPUs and supports a variety of query types, widening an enterprise’s options for incorporating its data into specific applications.

Another software-based option is Red Hat’s AI Inference Server, which is designed specifically to streamline the inference process. It runs in hybrid cloud environments and compresses large language models (LLMs) to reduce computing requirements.

There are many other inferencing aids, including the Cloudera AI Inference Service; a range of platforms from public cloud providers, including IBM Cloud; and a plethora of open-source tools.

Clearly, though, IBM is betting that customers want their IT systems to do more than AI inferencing. In the process, the company is seeking to broaden the reach of systems that until now have served a large-enterprise niche restricted to "Big Blue shops."

Futuriom Take: With its new Power11 servers and chips, IBM aims to break out of a longstanding niche into a growing market that combines high-end IT features for complex workloads with support for AI inference.