Cloud Providers Rush to Offer Generative AI

Generative artificial intelligence (generative AI), as the name implies, generates new content from input data, and it is taking tech markets by storm. Cloud providers are responding with an array of offerings for corporate customers. At the same time, they face challenges of expansion, security, and accountability.

To review: generative AI programs are built on foundation models, which are large models trained on vast swaths of data to recognize human language and respond by generating answers in text, images, audio clips, music, videos, and even computer code. Creating these models requires training algorithms on enormous datasets so that they learn billions of parameters encoding the associations in that data. The resulting models can in turn be applied to enterprise applications for a range of uses.

For example, OpenAI's GPT-3.5, a successor to GPT-3, is the basis for ChatGPT, which creates textual answers in response to text prompts such as questions or sentence fragments. Its conversational, back-and-forth style is why it is called a "chatbot." OpenAI has also launched GPT-4, which accepts images as well as text as input and generates text in response.

Many other models are emerging, such as Anthropic's Claude, a chatbot that performs many of the same functions as ChatGPT; Stability AI's Stable Diffusion, which creates images from text; and AI21 Studio, a platform from AI21 Labs that gives developers access to the company's language models for building text-based applications. Application programming interfaces (APIs) associated with foundation models let developers adapt generative AI for specific enterprise purposes.

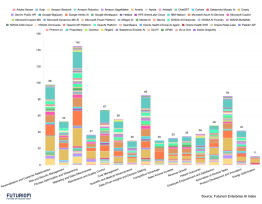

Cloud Providers Pick Their Generative AI Partners

