NVIDIA CEO Unveils New Generative AI Solutions

Generative artificial intelligence (AI) has ushered in a new era of computing, displacing the compute models we’ve used for decades. That was the message hammered home yet again by Jensen Huang, CEO of NVIDIA (Nasdaq: NVDA), during a keynote speech at the SIGGRAPH 2023 conference in Los Angeles this week.

“The generative AI era is upon us,” said Huang. “The iPhone moment of AI if you will, where all of the technologies of artificial intelligence came together in such a way that it’s now possible for us to enjoy AI in so many different applications.”

As he’s done repeatedly of late, Huang reiterated his message that accelerated computing – using specialized hardware such as graphics processing units (GPUs) to offload tasks that can bog down CPUs – is at the heart of the new paradigm. Older, CPU-centered models, which Huang calls general-purpose computing, aren’t cutting it. “General-purpose computing is a horrible way of doing generative AI,” Huang said.

NVIDIA founder and CEO Jensen Huang. Source: NVIDIA

New Chips on the Horizon

Companies engaged in training large language models (LLMs) and running inference won’t argue with Huang’s thesis. Cloud hyperscalers and makers of so-called frontier models for generative AI (ChatGPT, LLaMA, etc.), including companies such as OpenAI, Google, Meta, Microsoft, and Anthropic, have been hoovering up NVIDIA’s chips to achieve the processing power AI tasks require. This has led to a shortage of the most popular NVIDIA components – including the A100 and H100 – and to the rise of CoreWeave and other companies renting scarce NVIDIA resources.

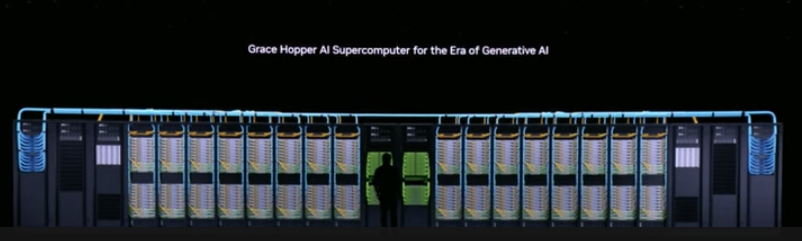

Perhaps the shortage could be reduced next year, when NVIDIA releases its latest high-end chip, the GH200, a Grace Hopper Superchip with integrated HBM3e, a new form of high-bandwidth memory that delivers a total of 10TB/s of combined bandwidth, supercharging the performance of the GPU platform. The chip is expected to be released in Q2 of 2024.

Huang (silhouetted below) stood in front of a true-to-size picture showing how the GH200 can be packed into racks of interconnected components to build a massive supercomputer:

Source: NVIDIA

NVIDIA Hugs Hugging Face

Huang also made several announcements that will help put generative AI into broader use. Topping the list is an agreement with startup Hugging Face that allows that vendor’s hub of open-source machine learning tools to run on NVIDIA’s DGX Cloud supercomputing environment. Hugging Face will offer the resulting “Training Cluster as a Service” sometime within the next few months, the vendors said.

NVIDIA also will give developers access to Hugging Face, GitHub, and NVIDIA’s own enterprise developer portal, NGC, via a new NVIDIA AI Workbench. This platform gives a broader range of application creators access to generative AI models and data repositories that allow them to customize those models and deploy them on DGX.

Don’t Forget Omniverse

Huang also touted a new release of NVIDIA’s Omniverse virtual world and digital twin creation platform that works with Pixar’s Universal Scene Description (OpenUSD or USD), a series of tools that allow for the creation and management of 3D virtual worlds. Huang noted that the release is aimed in particular at heavy industry applications and that Volvo Cars and BMW are customers.

During his talk, Huang repeated his mantra about the value of NVIDIA components: “The more you buy, the more you save,” he quipped, referring to improvements in size, power consumption, and efficiency with progressively more capable GPUs. Hopefully, supply will meet demand as the vendor continues to grow its roster of AI solutions.

Futuriom Take: NVIDIA enjoys an enviable lead in the components required to process generative AI applications. As the market grows, NVIDIA will be challenged to make best use of scarce resources while expanding its ecosystem of partnerships to foster faster and better solutions. This week’s announcements are major steps in that direction.