NVIDIA Leads Trend in Generative AI

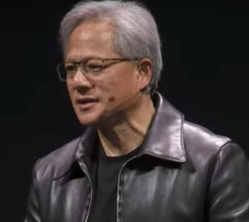

It’s been a watershed week for NVIDIA (Nasdaq: NVDA). Strong quarterly results on Wednesday, May 24, were capped with a higher-than-expected revenue outlook that sent shares soaring. As the market opened Tuesday, May 30, the AI component supplier had hit a $1 trillion market capitalization. Not surprisingly, CEO Jensen Huang was given a celebrity greeting as he landed at the Computex trade show in Taipei, Taiwan, on May 29 to deliver the message that the IT world has shifted on its axis once again – and NVIDIA’s in the middle of it all.

“There are two fundamental transitions happening in the computer industry today,” he told a Computex keynote audience Monday. “Accelerated computing and generative AI.”

According to NVIDIA, accelerated computing is “the use of specialized hardware to dramatically speed up work, often with parallel processing that bundles frequently occurring tasks. It offloads demanding work that can bog down CPUs, processors that typically execute tasks in serial fashion.” Generative AI is, of course, the ability to generate text, images, and more from natural language.
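To make the serial-versus-parallel distinction in that definition concrete, here is a minimal Python sketch. It is not NVIDIA code and does not touch a GPU. The function names (`scale`, `run_serial`, `run_offloaded`) and the use of a thread pool as a stand-in for an accelerator are assumptions made purely for illustration. It shows only the shape of the idea: a frequently repeated task is bundled into chunks and handed to a pool of workers instead of being processed one element at a time.

```python
from concurrent.futures import ThreadPoolExecutor


def scale(chunk, factor):
    """The repeated task: multiply every value in one chunk."""
    return [x * factor for x in chunk]


def run_serial(data, factor):
    """CPU-style execution: handle one element after another, in order."""
    return [x * factor for x in data]


def run_offloaded(data, factor, workers=4):
    """Accelerator-style execution (hypothetical stand-in): split the work
    into chunks and hand them to a pool of workers."""
    size = max(1, -(-len(data) // workers))  # ceiling division, at least 1
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves chunk order, so results line up with the input.
        parts = list(pool.map(scale, chunks, [factor] * len(chunks)))
    return [x for part in parts for x in part]


if __name__ == "__main__":
    data = list(range(10))
    # Both paths must produce the same answer; only the execution plan differs.
    print(run_serial(data, 3) == run_offloaded(data, 3))
```

Python threads will not actually speed up this arithmetic, so the sketch illustrates the structure of offloading rather than its performance. Real accelerated computing runs the bundled work on specialized hardware with thousands of parallel execution units.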

As he paced the stage in a two-hour talk, Huang described the new paradigm in more detail. Accelerated computing is the way to achieve generative AI, and the locus for that is a reimagined datacenter outfitted with new kinds of processors and networking. “The computer is the datacenter,” he said. And: “If the datacenter is the computer, then the networking is the nervous system.”

NVIDIA CEO Jensen Huang at Computex. Source: NVIDIA

Many Products, Many Announcements

The keynote was an extended presentation of NVIDIA’s products, which interact with one another in complicated and often confusing ways. Here is a partial rundown of those that support the trends of accelerated computing and generative AI:

DGX GH200. This is a supercomputer composed of up to 256 NVIDIA GH200 Grace Hopper Superchips linked with NVIDIA’s NVLink chip interconnect technology, which can hit 600 Gb/s. According to NVIDIA’s announcement, the result is a “single data-center-sized GPU [graphics processing unit]” for building large language models (LLMs). Google, Meta, and Microsoft will be among the first customers to trial this technology, NVIDIA said. (AWS was notably missing from the lineup.)

Helios supercomputer and others. NVIDIA will link four DGX GH200s with its Quantum-2 InfiniBand networking to form Helios, a massive supercomputer (location not announced). Additionally, the company is building two more supercomputers in Taiwan and one at its location in Israel. All will contain NVIDIA supercomputer chips; the Israeli one will pack the NVIDIA HGX H100.

SoftBank 5G/6G datacenters. SoftBank and NVIDIA said they will launch a platform for generative AI apps in 5G/6G networks based on the NVIDIA GH200 Grace Hopper Superchip. The new datacenters will be opened throughout SoftBank's network in Japan and will, according to NVIDIA’s announcement, “host generative AI and wireless applications on a multi-tenant common server platform, which reduces costs and is more energy efficient.”

NVIDIA Spectrum-X. NVIDIA announced technology designed to improve the performance of Ethernet-based cloud networks. According to the press release: “The platform starts with Spectrum-4, the world’s first 51Tb/sec Ethernet switch built specifically for AI networks. Advanced RoCE extensions work in concert across the Spectrum-4 switches, BlueField-3 DPUs and NVIDIA LinkX optics to create an end-to-end 400GbE network that is optimized for AI clouds.” This setup will be used in the Israeli supercomputer mentioned above.

There was much more in CEO Huang’s talk, which spanned the entire range of NVIDIA’s complex technologies. “We need a new computing approach, and accelerated computing is the path forward,” Huang said. But he acknowledged that the process is a complicated one that requires changes throughout the stack. “This way of doing software, this way of doing computation, is a re-invention from the ground up.”

NVIDIA is shouldering its way to the front of the line in offering solutions. But it won’t be able to do it all alone. A complex ecosystem of relationships, such as those with SoftBank and a range of manufacturers, AI startups, service providers, and integrators, will factor into achieving the goal of widespread generative AI applications. And according to experts, that will take time, perhaps up to a decade. Still, NVIDIA’s at the starting gate, and its innovations will be essential building blocks in the new computational order.