GTC Wrap: NVIDIA's Building an AI Factory for Factories

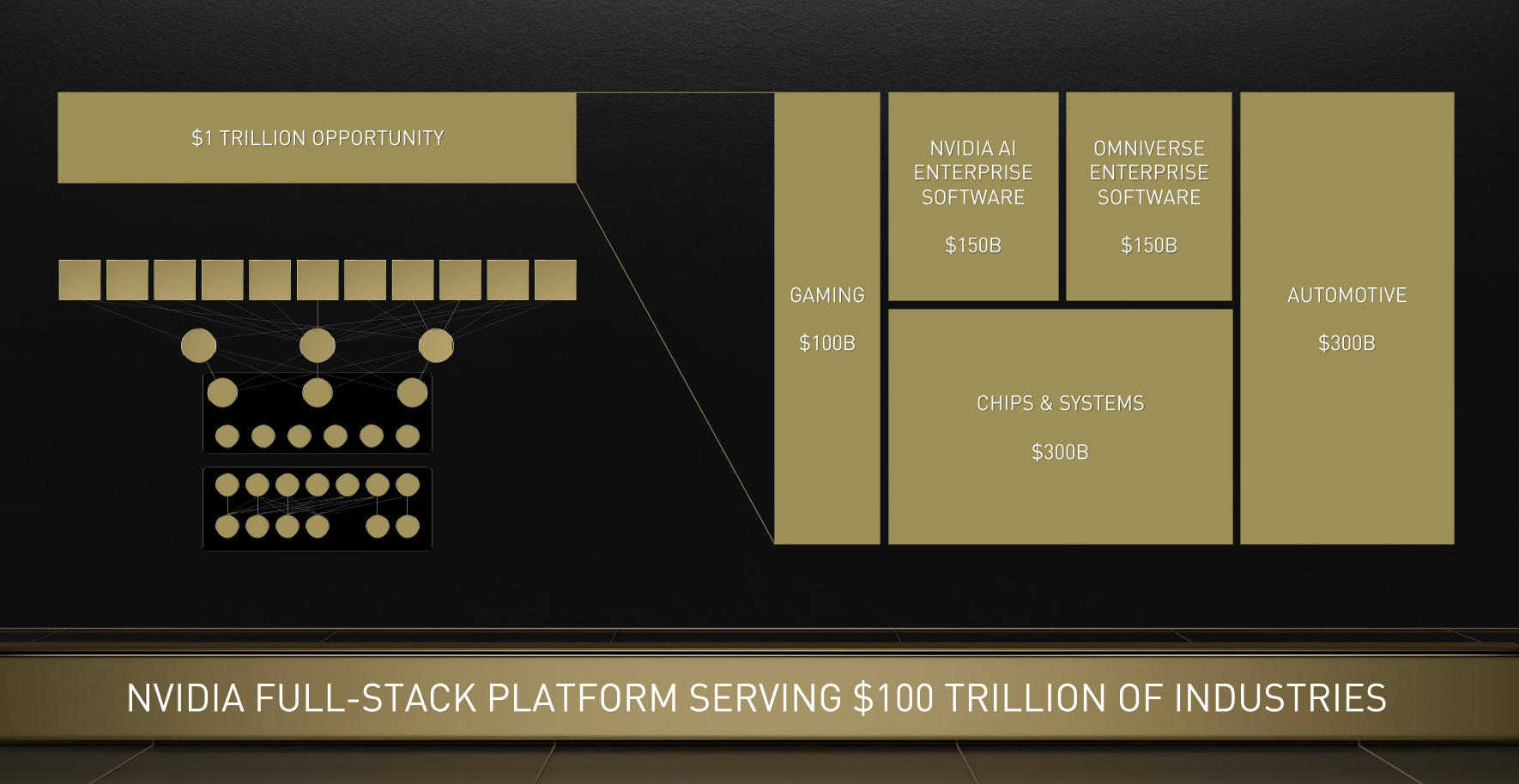

After a steady stream of remarkable product innovation and vision at its GTC event this week, NVIDIA's stock surged nearly 10% on Thursday. The winning concept? An array of technologies, including a new GPU, a new CPU, chip interfaces, and networking gear, all designed to build the most powerful datacenters to fuel artificial intelligence (AI).

“Computer science is being reinvented as we speak,” said NVIDIA CEO Jensen Huang during a Q&A with industry analysts. “The way you write software is being fundamentally changed by AI and ML [machine learning]. Datacenters are becoming AI factories with exponentially more data and intelligence.”

Wall Street’s delayed reaction was curious, as many of the product announcements came out earlier in the week and NVIDIA's Investor Day was held on March 22, the day of the keynote. So why the late pop? It seems that investors took a while to realize that NVIDIA is positioning itself to storm many lucrative, adjacent cloud datacenter markets, including networking software, data processing units (DPUs), and AI software. More importantly, it has a vision of how all of this can be coordinated to make it the dominant provider of AI engines, or, as CEO Huang calls them, “AI factories.”

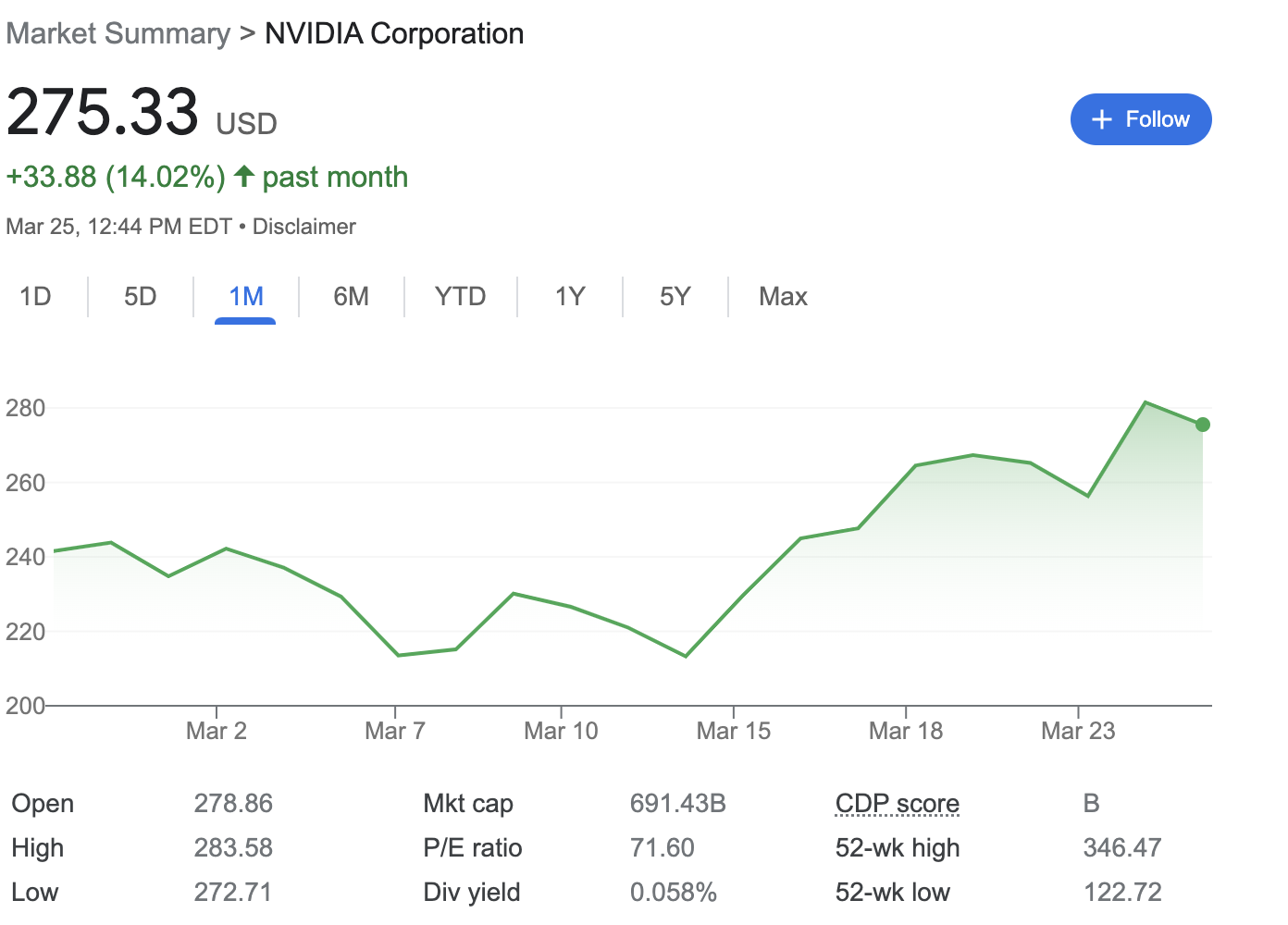

It helped that NVIDIA management outlined a $1 trillion market opportunity in presentations to investors on Wednesday. This includes what they foresee as a significant expansion of NVIDIA's software business, in which it would sell software tools for AI. According to investor research notes we have seen, NVIDIA described a $300 million market opportunity for this business alone, priced at $2K per CPU socket per year. That is a very attractive recurring revenue stream, and it got investors excited at the open on Thursday.

Huang Awes the Industry Again

Huang painted a picture of NVIDIA supplying the compute processing, networking, and even software for a wide range of new AI applications, including robotics, synthetic biology, and manufacturing, just to name a few. In his talks, he focused some of his attention on the industrial sector as a large opportunity for automation.

Indeed, in Futuriom’s recent research into datacenter hotspots, factory automation and computer vision have emerged as the AI applications gaining the most investment traction, as many industries realize the business efficiencies that AI can deliver.

On a call with industry analysts on Wednesday, Huang described what NVIDIA does as enabling AI factories, where data analysis powers new automation initiatives. “The raw material, the data comes in, and what comes out is intelligence,” said Huang.

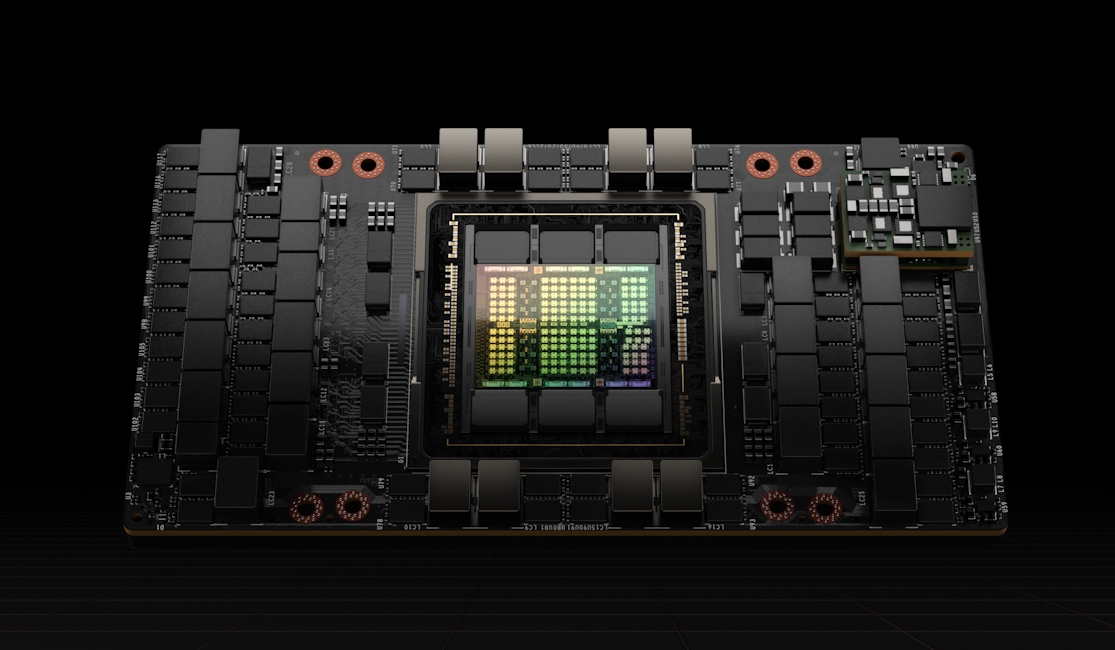

Many of NVIDIA’s announcements revolved around its GPUs and a new chip architecture called “Hopper” that enables datacenters to connect as many as 256 chips together with high-speed server interfaces for massive processing power.

This, says Huang, will enable NVIDIA to become the AI factory for “millions of factories,” including datacenters at the edge. Although NVIDIA is a perennially expensive stock, currently trading at a market cap of $658 billion on $27 billion in annual revenue, this premium likely reflects its track record of execution and the massive opportunity in AI, potentially worth hundreds of billions of dollars.
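To put "expensive" in numbers, dividing market cap by revenue gives a price-to-sales multiple. The short sketch below does that arithmetic, assuming the $27 billion figure is NVIDIA's annual revenue and that both inputs are in billions of US dollars; the function name is illustrative only.

```python
# Rough price-to-sales check using the figures quoted in the text.
# Assumption: $27B is annual revenue; both inputs are in billions of USD.
market_cap_billion = 658
revenue_billion = 27


def price_to_sales(market_cap: float, revenue: float) -> float:
    """Return market capitalization divided by annual revenue."""
    return market_cap / revenue


ratio = price_to_sales(market_cap_billion, revenue_billion)
print(f"Price-to-sales: {ratio:.1f}x")  # Price-to-sales: 24.4x
```

In other words, investors were paying roughly 24 dollars of market value for every dollar of annual sales.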

NVIDIA’s GTC continues to be one of the most impressive shows produced by technology vendors. Whereas most technology vendor shows are dominated by marketing flash and fluff, NVIDIA backs the hype up with hardcore engineering and compelling customer presentations.

Then there’s Huang, who’s got to be the most charismatic and lucid speaker in the tech industry. He’s also always ahead of the curve. Ever since Huang led NVIDIA beyond its roots as an obscure graphics processing company and into the AI cloud, the company has kept innovating and beating others, including giants like Intel, into key cloud growth markets.

New Processing Power for AI

NVIDIA backed up the hype with impressive technology that shows it’s not going to lose its edge in the battle to supply processing firepower for the AI cloud.

Among the technology innovations introduced at this week's GTC:

- The NVIDIA Hopper architecture and H100 GPU. Named for Grace Hopper, a pioneering computer scientist, Hopper succeeds the Ampere architecture. Its first chip, the H100, contains 80 billion transistors, and NVIDIA claims it is now the most powerful chip for accelerating applications such as AI and natural language processing. The architecture also brings a new version of NVLink, NVIDIA's proprietary chip interconnect, which can connect up to 256 GPUs. NVIDIA describes the H100 as the "new engine of the world’s AI infrastructures." Availability begins in the third quarter of 2022.

- The Grace CPU Superchip. Designed to complement the H100, this CPU is based on Arm Neoverse and comprises two CPUs connected by NVIDIA's NVLink-C2C interconnect technology. The Superchip includes up to 144 Arm cores in a single socket, delivering 1.5X the performance of the current generation, according to NVIDIA. It's designed for AI, high-performance computing (HPC), cloud, and hyperscale applications.

- Open NVLink. NVLink-C2C, NVIDIA’s own communications protocol for high-speed chip-to-chip interconnects, is designed to deliver higher-performance multiprocessing than alternatives such as PCIe. NVIDIA claims it delivers 1.5X the bandwidth, 25X better energy efficiency, and significantly lower latency in multi-GPU configurations. NVIDIA is also opening up the technology so customers can integrate their own chips and chiplets over chip-to-chip (C2C) connections. NVIDIA also says NVLink can be used to build massively powerful switches with a capacity of 25.6 terabits per second.

- Updates to the NVIDIA AI platform. NVIDIA produces a suite of software, adopted by global industry leaders such as Amazon, Microsoft, Snap, and NTT Communications, for advancing workloads such as speech, recommender systems, and hyperscale inference. This free software suite includes SDKs that help developers build AI and other applications more quickly.

- Omniverse for Developers. NVIDIA's suite of announcements wouldn't be complete without its own spin on the metaverse: NVIDIA Omniverse. The company announced a new Omniverse package for developers that enables them to collaborate on projects for markets such as gaming and design. Omniverse is a multi-GPU-enabled open platform for 3D design collaboration and real-time physical simulation. In other words: chips for the metaverse!

- NVIDIA introduced a 400-Gbps end-to-end networking platform, NVIDIA Spectrum-4. It consists of the Spectrum-4 switch family, the NVIDIA ConnectX-7 SmartNIC, NVIDIA BlueField-3 data processing units (DPUs), and NVIDIA DOCA datacenter infrastructure software. This follows NVIDIA’s announcement of a new partnership with Pluribus Networks last week, indicating that it’s ready to charge into the network market with a full suite of powerful networking solutions, including an array of networking software, high-performance DPUs, and its own switch family.

- But wait... there's more. NVIDIA also announced Orin, an AI computer targeted at robotaxis and other autonomous vehicles. And its DRIVE autonomous vehicle software will start shipping in Mercedes-Benz cars in 2024.

Anything else? Basically, NVIDIA now touches most of the elements of the hottest AI and automation markets.

Overall, NVIDIA continues to impress, not only at the level of typical vendor-conference marketing hype but on the technical level. I found it interesting that the stock reaction was muted and delayed, but it appears to me that NVIDIA is preparing several initiatives to expand into significant growth markets, including datacenter software and networking.

For now, NVIDIA appears to be emerging as the leader of the AI factory for factories.